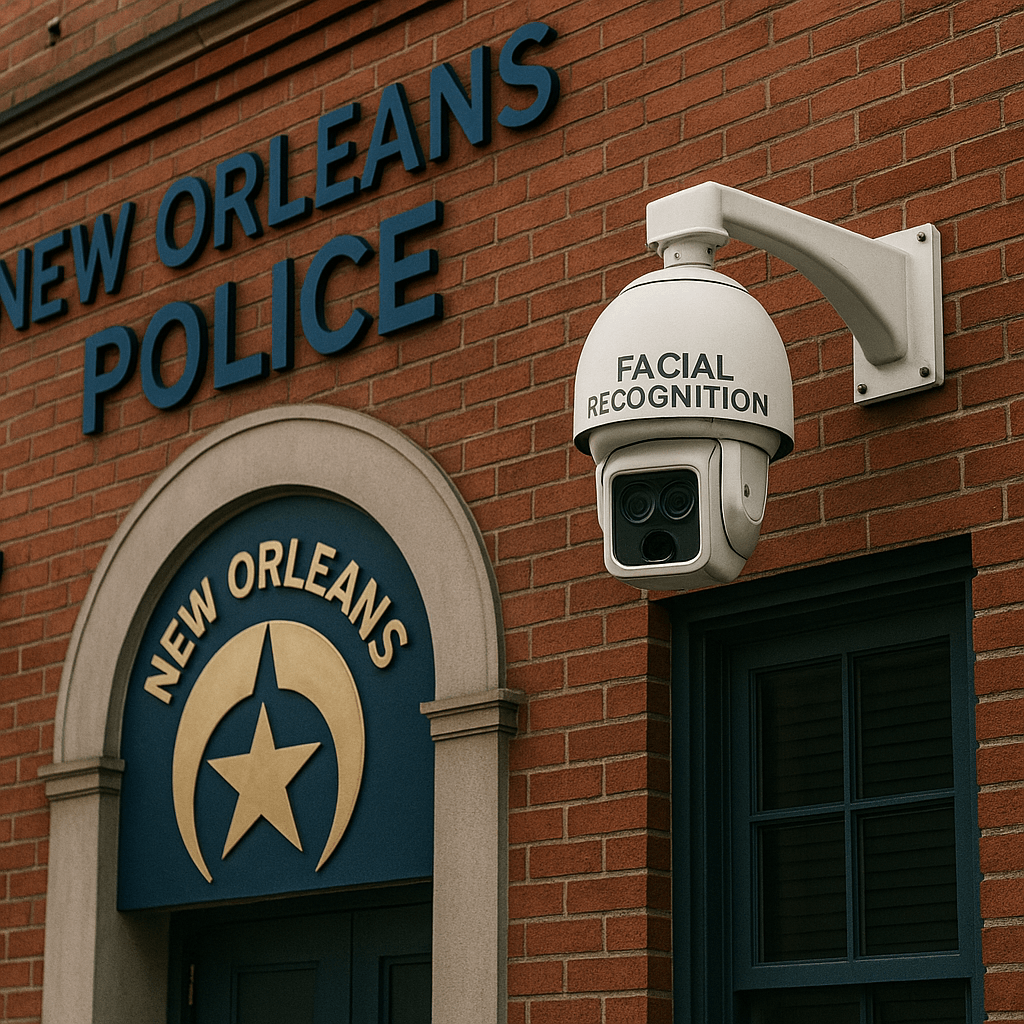

On May 19, 2025, the New Orleans Police Department made headlines by abruptly halting its use of facial recognition alerts generated by privately operated surveillance cameras. The decision came amid growing concern that the practice may have breached a 2022 city ordinance designed to limit AI-driven surveillance.

The department had been relying on data provided by Project NOLA, a community-led camera network originally intended to reduce crime through public-private collaboration. Over time, the network expanded its technological reach, integrating AI facial recognition software that could instantly identify individuals flagged in law enforcement databases. While some praised the tool for its crime-fighting potential, it quickly drew criticism from privacy advocates and legal experts, who argued that such use bypassed oversight mechanisms and potentially infringed on civil liberties protected under local law.

The ordinance in question, enacted three years earlier, explicitly prohibited the use of facial recognition technology by city agencies without proper legal procedures, citing risks of racial bias, wrongful identification, and a lack of transparency. The revelation that NOPD had continued to receive real-time alerts from Project NOLA cameras prompted immediate backlash from local officials and residents alike. In response, the department suspended the program indefinitely pending a full legal review.