Microsoft’s push into custom AI chips isn’t just another “faster hardware” announcement — it’s a sign that the cloud giants are starting to treat silicon as the new battleground for the AI era. For years, companies like Microsoft, Amazon, and Google could build massive businesses on top of someone else’s chips. But AI changes that math. When the cost of running models becomes one of your biggest expenses, “good enough” hardware stops being good enough.

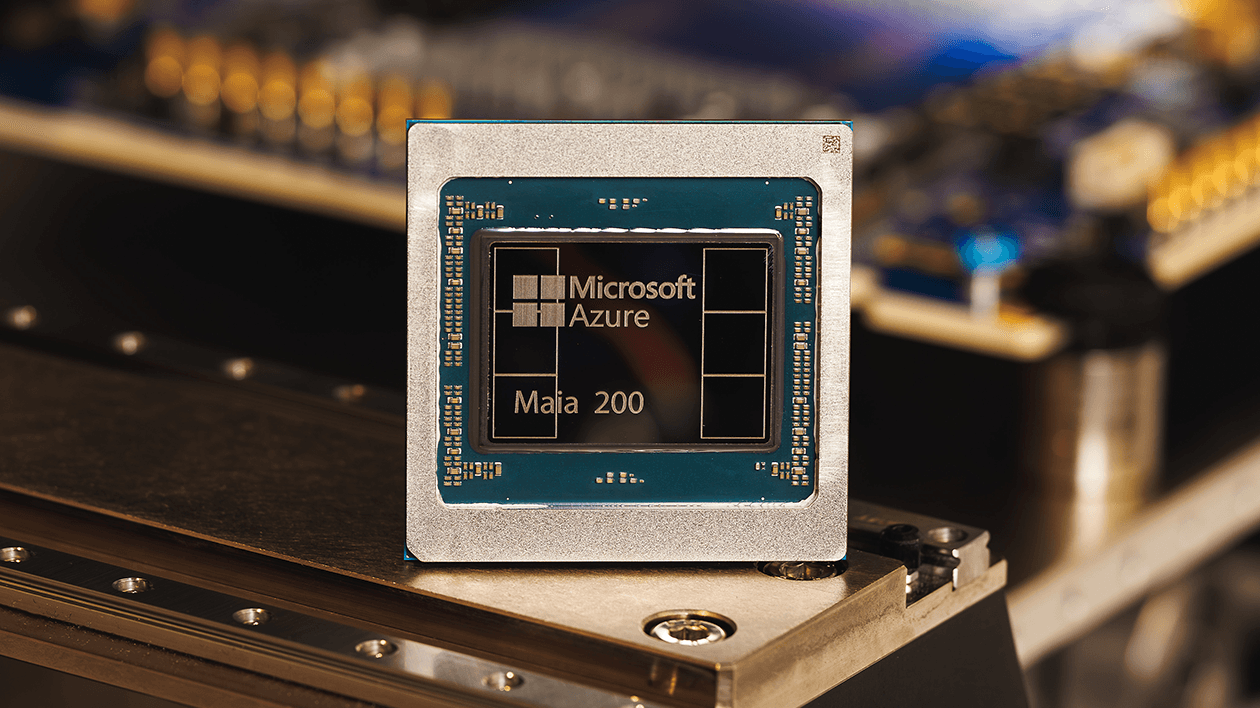

That’s why Microsoft’s Maia 200 matters. Not necessarily because it beats Amazon’s Trainium on one benchmark or edges out Google’s TPUs on another, but because it shows Microsoft is serious about owning more of the stack: the chip, the data center, the models, and the products people actually use. Microsoft says Maia 200 is already powering workloads inside its Des Moines data center, and is even running OpenAI’s GPT-5.2 models alongside Microsoft 365 Copilot and internal work from its Superintelligence team. That’s a pretty direct message: this isn’t a lab experiment; it’s infrastructure the company plans to scale.

What’s driving all of this is simple: AI is expensive to operate. Training a model is costly, but it’s largely a one-time hit per model. Serving that model to millions of users, all day, every day, is the ongoing cost that can quietly eat margins alive. And that’s where custom chips shine. They’re not just about raw speed; they’re about performance-per-dollar, power efficiency, and hardware tuned specifically for inference (the day-to-day “running” of AI models once they’re trained).
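To put rough numbers on the “one-time hit versus ongoing cost” argument, here’s a back-of-the-envelope sketch. It expresses performance-per-dollar as dollars per million generated tokens and compares two hypothetical accelerators against a one-time training bill. Every figure (hourly cost, throughput, daily traffic, training cost) and every function name is an illustrative assumption, not a number Microsoft or anyone else has published for Maia 200, Trainium, or TPUs.

```python
# Back-of-the-envelope model of training vs. serving cost.
# Every number here is a hypothetical placeholder, not a reported figure
# for Maia 200, Trainium, TPUs, or any real model.

def cost_per_million_tokens(hourly_cost_usd: float, tokens_per_second: float) -> float:
    """Performance-per-dollar, expressed as dollars per 1M generated tokens (lower is better)."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost_usd / (tokens_per_hour / 1_000_000)


def serving_cost(cost_per_m: float, tokens_per_day: float, days: int) -> float:
    """Ongoing inference cost: it keeps growing for every day the model is in service."""
    return cost_per_m * (tokens_per_day / 1_000_000) * days


def days_until_serving_exceeds_training(training_cost: float, cost_per_m: float,
                                        tokens_per_day: float) -> int:
    """First whole day on which cumulative serving cost is above the one-time training cost."""
    daily = cost_per_m * tokens_per_day / 1_000_000
    return int(training_cost // daily) + 1


if __name__ == "__main__":
    TRAINING_COST = 100_000_000         # hypothetical one-time training bill, USD
    TOKENS_PER_DAY = 1_000_000_000_000  # hypothetical daily traffic: 1 trillion tokens

    # Two hypothetical accelerators with equal throughput but different hourly cost.
    general = cost_per_million_tokens(hourly_cost_usd=3.60, tokens_per_second=2_000)
    custom = cost_per_million_tokens(hourly_cost_usd=2.70, tokens_per_second=2_000)

    for name, cost_per_m in [("general-purpose", general), ("custom", custom)]:
        yearly = serving_cost(cost_per_m, TOKENS_PER_DAY, days=365)
        crossover = days_until_serving_exceeds_training(TRAINING_COST, cost_per_m, TOKENS_PER_DAY)
        print(f"{name}: ${cost_per_m:.3f} per 1M tokens, "
              f"${yearly:,.0f} per year to serve, "
              f"serving passes the training bill on day {crossover}")
```

With these made-up inputs, cumulative serving spend overtakes the entire training bill within the first year on either chip, and the chip that is cheaper per token saves tens of millions of dollars a year at the same traffic. That compounding is why performance-per-dollar on inference, more than peak speed, matters so much for custom silicon.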