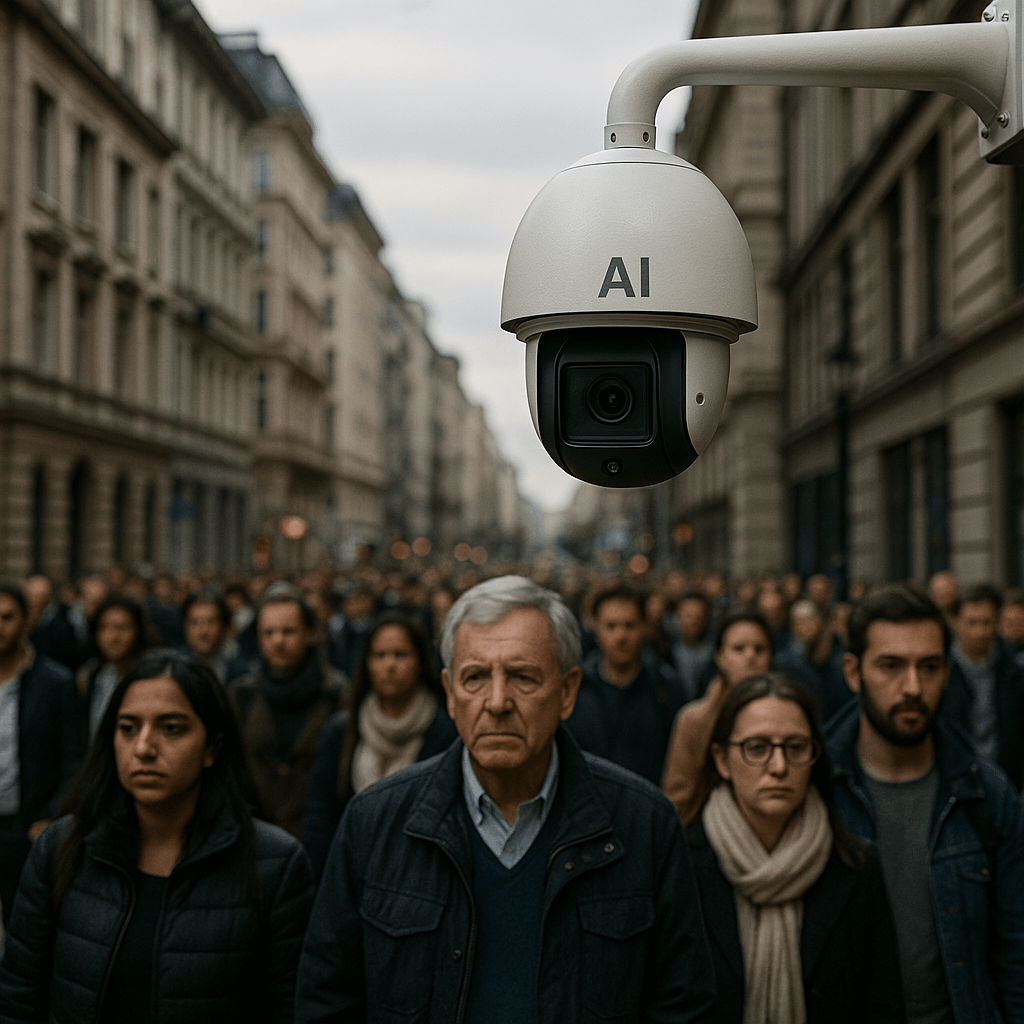

As of May 19, 2025, AI-powered surveillance systems are transforming security landscapes worldwide, offering unprecedented capabilities but also igniting fresh privacy concerns. From city streets to corporate offices, new technologies use artificial intelligence to analyze vast amounts of video data in real time, identifying patterns, recognizing faces, and even flagging potential threats before they materialize.

Governments and businesses hail these tools as breakthroughs in public safety and efficiency. Smart cameras can detect unusual behavior, monitor crowds, and speed up investigations. Yet as these systems become more pervasive, privacy advocates warn that the line between protection and intrusion is blurring. The ability to track individuals continuously raises questions about consent, data security, and the potential for abuse.

Experts stress the urgent need for transparent regulations and ethical guidelines to keep AI surveillance in check. Without them, the risks of mass monitoring and the erosion of civil liberties grow ever more real.

As AI sharpens its gaze, society faces a critical choice: harness these powerful tools responsibly, or risk surrendering privacy to a digital panopticon. The conversation is only just beginning, and the stakes have never been higher.